Navigating the AI Content Landscape: Understanding, Using, and Bypassing AI Content Detectors

The rapid advancement of artificial intelligence, particularly large language models (LLMs) like GPT, has revolutionized content creation. From marketing copy to academic papers, AI-generated text is becoming increasingly prevalent. While this offers unprecedented efficiency, it also raises critical questions about authenticity, originality, and potential misuse. This has led to the emergence of AI content detectors – sophisticated tools designed to identify whether a piece of text was written by a human or a machine.

Understanding these detectors, their underlying mechanisms, and their limitations is crucial for anyone involved in content creation today. This guide covers how they operate, the challenges they pose, and practical approaches for both creators and consumers of digital content.

What Are AI Content Detectors and Why Are They Essential?

AI content detectors are software tools that use machine learning algorithms to analyze text. Their primary function is to differentiate between content written by a human and content generated by artificial intelligence models. These tools are now used in various sectors, from education (detecting AI-written assignments submitted as original work) to digital marketing (ensuring authentic brand voice) and journalism (verifying content origins).

As AI language models become more sophisticated and widely accessible, the ability to identify AI-generated text becomes increasingly vital. Without these tools, the internet could be flooded with machine-generated content, potentially blurring the lines of authorship, credibility, and even originality. However, it’s essential to recognize that while powerful, these detectors are not infallible. They come with inherent limits, and relying solely on their verdicts can lead to misjudgments and false positives.

The goal of AI detectors is not to stifle innovation, but rather to promote transparency and maintain a high standard of human-centric content where it matters most. They serve as gatekeepers, helping to ensure that the unique nuances, creativity, and personal touch inherent in human writing continue to be valued and recognized.

How Do AI Content Detectors, Like GPTZero, Function?

At their core, AI content detectors analyze the statistical properties of text. They are typically trained on large datasets of both human-written and AI-generated content. When an AI model generates text, it does so by repeatedly predicting a probable next word (more precisely, a token) in a sequence, aiming for coherence and fluency. This predictive process, while impressive, often results in patterns that differ subtly yet measurably from human writing.

The Role of Probability, Perplexity, and Burstiness

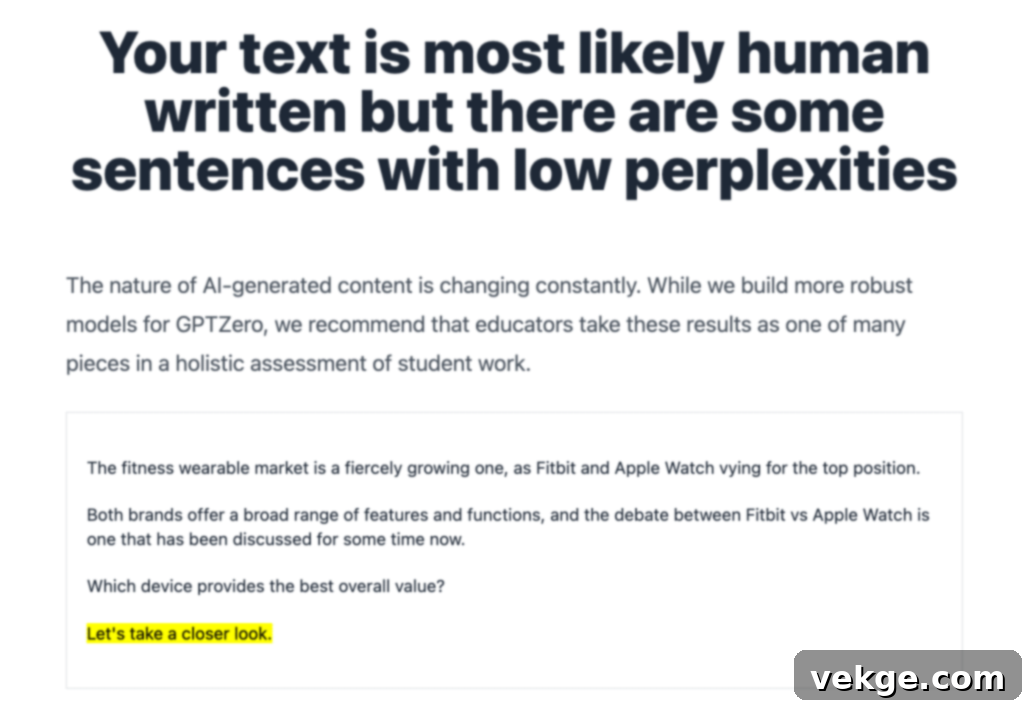

Most content detectors, including tools like GPTZero, primarily focus on metrics such as probability, perplexity, and burstiness to evaluate text:

- Probability: AI models are trained on immense amounts of data to predict which words are most likely to appear next in a given context. AI-generated text often leans heavily on high-probability words and phrases, making it statistically more predictable. Content detectors identify these patterns.

- Perplexity: This metric measures how “surprised” a language model is by a sequence of words. A text with low perplexity is highly predictable and aligns well with the statistical patterns the model has learned. Conversely, human-written text often exhibits higher perplexity because it tends to be less predictable, incorporating unexpected phrasing, creative word choices, and more varied sentence structures. AI detectors look for consistently low perplexity as a sign of machine authorship.

- Burstiness: Human writing is characterized by its natural variation in sentence length and structure. We tend to mix long, complex sentences with short, direct ones. This “burstiness” makes our text dynamic and engaging. AI models, on the other hand, often produce text with more uniform sentence lengths and structures, leading to lower burstiness. Detectors analyze this consistency to flag AI-generated content.
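
To make these metrics concrete, here is a minimal Python sketch. It assumes you already have the probability that some language model assigned to each token (the `token_probs` list is a hypothetical input), and it uses the coefficient of variation of sentence lengths as one simple stand-in for burstiness. It illustrates the ideas only; it is not a description of how GPTZero or any other product computes its scores.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token.

    token_probs: probabilities (0 < p <= 1) a language model assigned to
    each token that actually appeared. Hypothetical input for this sketch.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text):
    """Variation in sentence length, as standard deviation divided by mean.

    This is an assumed, simplified definition: 0.0 means every sentence
    has the same number of words; larger values mean more variation.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)
```

A sequence in which every token had probability 0.5 has a perplexity of 2, and a text whose sentences all have the same word count has a burstiness of 0 under this definition.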

When a detector analyzes text, it calculates these scores. If the combination of high probability, low perplexity, and low burstiness crosses certain thresholds, the text is flagged as potentially AI-generated. While this methodology is powerful, it’s not foolproof. The lines between human and machine writing are becoming increasingly blurred as AI models become more sophisticated, capable of generating text that mimics human unpredictability.
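
The thresholding step described above might be sketched as follows. The `Scores` fields and all three cutoff values are hypothetical and chosen only for illustration; real detectors tune their own thresholds and usually combine signals in more elaborate ways.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    mean_token_prob: float  # average probability of the observed tokens
    perplexity: float       # lower means more predictable text
    burstiness: float       # lower means more uniform sentences


# Hypothetical cutoffs, not taken from any real detector.
MIN_MEAN_PROB = 0.60
MAX_PERPLEXITY = 20.0
MAX_BURSTINESS = 0.30


def flag_as_possibly_ai(scores: Scores) -> bool:
    """Flag only when all three signals point toward machine authorship."""
    return (
        scores.mean_token_prob >= MIN_MEAN_PROB
        and scores.perplexity <= MAX_PERPLEXITY
        and scores.burstiness <= MAX_BURSTINESS
    )
```

Requiring all three conditions is one possible design choice. It reduces false positives at the cost of missing AI text that is atypical on any single metric.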

Strategies for Bypassing AI Content Detectors

While the ethical implications of intentionally bypassing AI detectors are a subject of ongoing debate, there are situations where creators might need to ensure their human-written content isn’t falsely flagged. This could be due to a unique writing style that resembles AI patterns, or for legitimate personal and professional reasons where content needs to pass scrutiny. It’s crucial to understand that “bypassing” often means making AI-generated text appear more human, or ensuring human text doesn’t appear machine-like.

Making AI-Generated Content Appear More Human

If you’ve used AI as a drafting tool and want to “humanize” the output, consider these techniques:

- Inject Personal Anecdotes and Opinions: AI struggles to generate genuine personal experiences or strong, nuanced opinions. Weave in your own stories, perspectives, and biases. This immediately boosts the “human factor.”

- Vary Sentence Structure and Length (Increase Burstiness): Actively rewrite sentences to introduce more variety. Combine short, punchy sentences with longer, more complex ones. Break up predictable patterns.

- Introduce Idiosyncrasies and Imperfections: Human writing isn’t always perfectly grammatically correct or polished. Occasionally, a conversational tone, a deliberate run-on sentence, or an unusual phrasing can signal human authorship. However, use this judiciously to maintain readability and professionalism.

- Utilize Figurative Language: Metaphors, similes, idioms, and humor are often used differently by humans than by AI. Incorporate these naturally to add depth and unique expression.

- Challenge the Obvious: Instead of simply stating facts, offer a unique interpretation or question a common assumption. Human writers often explore nuances that AI might overlook.

- Consciously Use Lower Probability Words: As mentioned, AI often defaults to high-probability words. One strategy is to swap some of these with less common but still appropriate synonyms. This can make the text less predictable for the detector, as illustrated in the table below:

| Original Word (High Probability) | Original Probability | Replaced Word (Lower Probability) | Replaced Word’s Probability |
|---|---|---|---|
| Competitive | 99.90% | Growing | 72.01% |
| You can also find out more about | 95.03% | You can also read about the advantages of using | 65.87% |
| Spot | 99.28% | Position | 57.89% |
| Companies | 85.62% | Brands | 63.05% |
| Wide | 96.83% | Broad | 81.09% |
| Raging | 89.26% | Discussed | 54.01% |
| Experience the Difference | 96.19% | Value | 45.77% |

While changing synonyms to lower probability words can reduce the likelihood of detection, it’s a delicate balance. The goal is to make the content less predictable without sacrificing readability, clarity, or the intended message. Overdoing it can make your content sound awkward or unnatural, defeating the purpose.
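
One way to see why these probabilities matter is to convert them to surprisal, the number of bits of "surprise" a word contributes, which is the quantity that perplexity averages. The sketch below uses three rows from the table; the function name `surprisal_bits` is introduced here for illustration, and the code assumes the percentages are the model's probability for that word in context.

```python
import math


def surprisal_bits(probability: float) -> float:
    """Surprisal in bits: -log2(p). Rarer choices carry more surprise."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)


# (original word, its probability, replacement, its probability)
rows = [
    ("Competitive", 0.9990, "Growing", 0.7201),
    ("Spot", 0.9928, "Position", 0.5789),
    ("Wide", 0.9683, "Broad", 0.8109),
]

for original, p_orig, replacement, p_repl in rows:
    print(
        f"{original}: {surprisal_bits(p_orig):.3f} bits -> "
        f"{replacement}: {surprisal_bits(p_repl):.3f} bits"
    )
```

In each row the replacement carries more surprisal than the original, which is what raises the text's overall perplexity.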

Why You Shouldn’t Rely Solely on AI Content Detectors

AI content detectors are valuable tools, but their limitations are significant and must be understood. Relying on them as the sole arbiter of content authenticity can lead to serious issues, including false accusations and the misidentification of genuinely human-written content.

The Problem of False Positives

One of the most critical issues is the phenomenon of false positives. This occurs when a detector flags human-written text as AI-generated. Several factors contribute to this:

- Predictable Human Writing Styles: Some human writers naturally adopt a very clear, concise, and structured style, especially in technical documentation, academic papers, or formal reports. This can inadvertently mimic the low perplexity and low burstiness often associated with AI.

- Non-Native English Speakers: Individuals who are not native English speakers might write in a more simplistic, direct, or grammatically precise manner to avoid errors. This can also trigger false positives from detectors that interpret such patterns as machine-like.

- Training Data Bias: If an AI detector’s training data heavily features a specific style of human writing (e.g., highly creative, informal), it might be more prone to misidentifying other valid human writing styles as AI-generated.

- Evolving AI Capabilities: As AI models rapidly advance, they are becoming increasingly adept at mimicking human nuances. This creates an “arms race” where detectors constantly struggle to keep up, leading to a higher chance of sophisticated AI output slipping through or, conversely, human text being over-flagged as AI-like.

- Lack of Context and Nuance: Detectors analyze text purely on statistical patterns, lacking the ability to understand the deeper context, intent, or creative spark behind a piece of writing. They cannot discern sarcasm, irony, personal experience, or deep analytical thought in the same way a human reader can.

These limitations highlight the fact that AI detectors should be used as a supplementary tool, a signal for further human investigation, rather than a definitive judge of content origin. Misidentifying human content as AI-generated can be damaging to creators’ reputations and can stifle genuine creativity.
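
A short base-rate calculation shows why a flag should prompt further review rather than a verdict. The sketch below is an assumption-laden illustration: the function name and every rate passed to it are hypothetical, not measurements of any real detector.

```python
def share_of_flags_from_humans(
    human_fraction: float,
    false_positive_rate: float,
    true_positive_rate: float,
) -> float:
    """Fraction of all flagged texts that were actually written by humans.

    human_fraction: share of submitted texts that are human-written.
    false_positive_rate: share of human texts the detector wrongly flags.
    true_positive_rate: share of AI texts the detector correctly flags.
    """
    flagged_human = human_fraction * false_positive_rate
    flagged_ai = (1.0 - human_fraction) * true_positive_rate
    total_flagged = flagged_human + flagged_ai
    if total_flagged == 0:
        return 0.0
    return flagged_human / total_flagged


# Hypothetical scenario: 90% of texts are human-written, the detector
# wrongly flags 5% of them and catches 90% of AI-written texts.
share = share_of_flags_from_humans(0.90, 0.05, 0.90)
print(f"{share:.0%} of flagged texts are human-written")
```

Under these assumed rates, about one in three flagged texts is human-written, even though the detector is wrong about only 5% of human writers.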

Alternative Solutions to AI Content Detection for Authenticity

Given the inherent limitations of AI content detectors, a multi-faceted approach is necessary to ensure the authenticity and quality of content in the digital age. This involves a blend of human judgment, ethical practices, and a critical mindset.

- Cultivate Critical Human Judgment: The most powerful “detector” remains the human mind. Journalists, content producers, educators, and general readers should hone their critical thinking skills. Look for signs of genuine insight, original thought, nuanced arguments, personal voice, and unique perspectives that AI often struggles to replicate. Does the content sound generic, or does it offer something truly fresh?

- Manual Content Review and Comparison: When in doubt, a thorough manual review is indispensable. Compare the suspected text with known examples of both human-generated and AI-generated content. Does it align with the author’s known style? Is it unusually free of errors, or does it lean heavily on common phrases? Does it feel “soulless” or deeply engaging?

- Consult Experts in AI and NLP: For high-stakes situations, consulting experts in artificial intelligence and natural language processing can provide deeper insights. These professionals understand the capabilities and limitations of current AI models and can offer more informed assessments.

- Transparency and Disclosure: One of the most ethical and effective solutions is transparency. If AI tools were used in the content creation process (e.g., for brainstorming, drafting, or editing), clearly disclose this to your audience. This empowers readers to make informed decisions about how they engage with the content and fosters trust. Labeling content as “AI-assisted” or “AI-generated” allows for a more honest digital ecosystem.

- Source Verification and Fact-Checking: Regardless of whether content is human or AI-generated, robust fact-checking and source verification are paramount. AI can hallucinate information, so always cross-reference claims, statistics, and citations with reliable sources.

- Focus on Value and Originality: Ultimately, high-quality, original content that provides genuine value, offers unique perspectives, and demonstrates deep understanding will always stand out. Content creators should prioritize these aspects, as they are inherently more difficult for AI to replicate convincingly. Developing a strong, identifiable human voice and style is the best defense against being mistaken for a machine.

By embracing these alternative strategies, we can move beyond simply detecting AI and instead foster an environment that values authentic creation, critical engagement, and responsible technological integration.

Conclusion: Navigating the Future of Content with AI

AI content detectors represent a critical response to the rise of advanced language models. They are undeniably useful tools for recognizing potentially AI-generated text, acting as an early warning system in a rapidly evolving digital landscape. However, it is crucial to acknowledge their inherent imperfections and avoid relying on them as the sole arbiters of content authenticity.

As AI language models continue to advance at an astonishing pace, capable of generating increasingly human-like text, it is essential that both content creators and consumers maintain an open, adaptable mindset. The “arms race” between AI generation and detection is ongoing, and what works today might be obsolete tomorrow.

Understanding the strengths and, more importantly, the limitations of AI content detection tools will allow us to make more informed and balanced decisions about their use. It encourages a more nuanced approach to content evaluation, one that prioritizes critical human judgment, ethical transparency, and a deep appreciation for the unique qualities of human authorship.

Our ultimate aim should be to foster an environment that promotes equitable and high-quality content creation and distribution. This future envisions a collaborative space where AI language models serve as powerful assistants and catalysts for creativity, coexisting alongside human authors for mutual gain, rather than replacing them entirely. By embracing technology responsibly and upholding the value of human intellect and expression, we can navigate the complexities of AI-generated content and ensure a rich, authentic digital future.